Abstract

Alzheimer's disease (AD), a common form of dementia, is characterized by memory loss and cognitive decline. As a progressive neurological disease, AD is difficult to diagnose early, yet early diagnosis is essential for effective intervention. Traditional AI methods such as machine learning and deep learning have shown encouraging results in identifying AD biomarkers and predicting disease progression. However, their "black-box" nature limits their clinical utility. This review emphasizes how crucial explainability is for AI models, allowing clinicians to understand, trust, and interpret AI-driven predictions. We examine various XAI techniques, including rule-based designs, attention mechanisms, and model-agnostic (model-independent) approaches, focusing on their role in analyzing neuroimaging data, genetic factors, and cognitive assessments. Additionally, we discuss the incorporation of XAI into clinical procedures and its potential for personalized medicine in AD care. The review concludes by addressing the challenges and opportunities for XAI in enhancing the precision, transparency, and clinical acceptance of Alzheimer's identification systems.

Keywords

Alzheimer's disease, Artificial Intelligence (AI), Deep learning, Machine learning, Types of XAI methods, Future directions and opportunities.

Introduction

Alzheimer's disease (AD) is a major contributor to dementia and is recognized as one of its principal etiological factors (1). The condition is marked by progressive deterioration of cognitive faculties such as memory, logical thinking, and communication. It is predominantly observed in the elderly and has considerable repercussions for affected individuals as well as their families and caregivers (2,3). AD represents a substantial public health concern that is escalating into a health crisis, with projections indicating a notable rise in the number of cases as the global population ages (4). Hippocampal atrophy is one of the earliest and best-established indicators of Alzheimer's disease (5). Atrophy reduces the size of the hippocampus, a region integral to memory formation. As AD progresses, cortical changes commonly appear; cortical thinning and disruptions in white matter are suggestive of the later stages of the disease (11). These structures support the brain's neurological architecture.
These alterations in brain structure underscore the importance of early detection in managing and alleviating the effects of the disorder (6). Prompt detection of AD is vital because it permits early intervention aimed at delaying the onset of signs and symptoms, improving quality of life, and giving individuals and their households additional time to prepare for future challenges (7,10). Additionally, early detection allows optimal therapeutic interventions to be applied at the most favourable junctures, potentially delaying the emergence of more severe symptoms (8). Figure 1 shows MRI images of a normal brain, a brain with mild cognitive impairment (MCI), and a brain affected by Alzheimer's disease (9). The normal brain appears structurally sound, with well-preserved gray matter (GM). Conversely, the AD brain on the right exhibits a significant reduction in GM volume, especially in areas important for memory and cognitive function, accompanied by enlargement of certain regions because of atrophy and loss of brain matter. In MCI, an intermediate reduction in GM volume is visible, lying between the two extremes (1).

Fig 1: T1-weighted MRI images contrasting the brains of people with and without the disease.

Artificial Intelligence (AI)-Based Alzheimer's Disease Identification

Artificial intelligence (AI) plays an integral role in the identification and diagnosis of dementia caused by Alzheimer's disease. It applies machine learning and deep learning (13,16) to analyze complex medical information, including brain scans (MRI and PET) and genetic tests (1). AI can find possible signs and variations in the early phases of Alzheimer's that humans may miss (12). AI processing allows large datasets to be analyzed and can help detect biomarkers, predict disease development, and provide early diagnosis (11). AI can also analyze speech and cognitive test results to identify people experiencing early cognitive decline. With its ability to improve diagnostic accuracy (14), AI supports early intervention and personalized treatment strategies, improving patient therapy and outcomes in the management of Alzheimer's disease (47).

XAI’s Role in Clinical Settings

Explainable Artificial Intelligence (XAI) is essential to raising the quality of AI in clinical practice, particularly for Alzheimer's disease (AD) identification, where it improves diagnosis and management and builds trust in AI-based medical tools (19). Dementia is a complex neurodegenerative disorder accompanied by gradual mental deterioration, and prompt assessment plays a vital role in successful treatment (20). However, AI models, particularly deep learning methods, are often considered "black boxes" with poor transparency (16), which makes it challenging for physicians to understand how a diagnosis is reached. XAI is therefore essential for diagnosing AD. Explainability is the capacity of AI models to offer clear and intelligible justifications for their decisions (16,17,24). Explainable AI is currently advancing with tools such as Local Interpretable Model-agnostic Explanations (LIME) and SHapley Additive exPlanations (SHAP) (18).

Progression of XAI for Improved Clinical Decision-Making

Advancing XAI has very high potential to improve clinical decision-making in the detection of dementia and Alzheimer's disease (21). Dementia is a complex neurological condition, and its diagnosis can be quite challenging to establish in the early stages (15,22). Current diagnostic methods include imaging and neuropsychological tests, which are valuable but time-consuming and subjective. With XAI in clinical settings, health professionals gain better insight into the reasoning behind AI-driven diagnoses and treatment recommendations, making decisions more accurate and reliable (27). XAI can increase the transparency of machine learning and deep learning models, particularly those that process brain scans or patient information (23). Its explanations make it easier for clinicians to understand how specific features, such as changes in brain volume and biomarkers, contribute to a model's prediction. This builds confidence in AI tools and, consequently, supports more informed decisions by medical experts. Furthermore, XAI can uncover patterns and insights in large datasets that help with early identification and customized treatment strategies (24). For Alzheimer's disease, this can mean identifying at-risk individuals earlier or recommending targeted interventions tailored to individual needs (48). Finally, advances in XAI for Alzheimer's disease detection improve medical outcomes and foster a more collaborative relationship between AI systems and healthcare providers in delivering patient-centered care (1).

Methods for Detecting Alzheimer’s Disorder

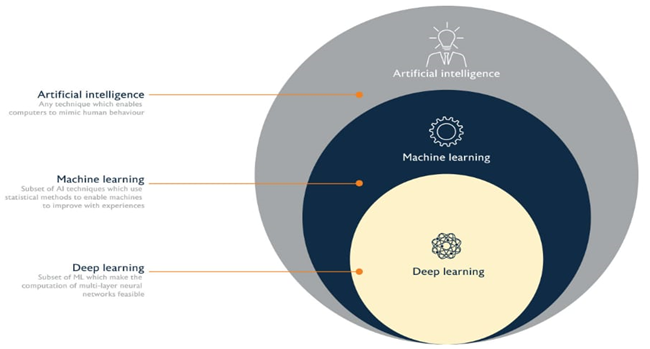

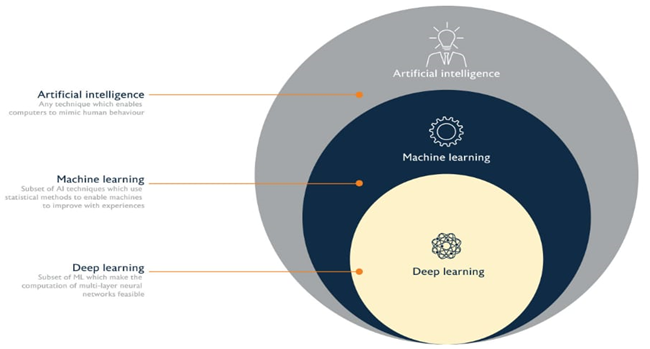

Artificial intelligence encompasses methods that simulate human abilities such as learning, planning, reasoning, and predicting (25). It comprises natural language processing, machine learning, and computer vision. AI is essential to advancing AD diagnosis, which is regarded as one of the most notable applications of AI in the medical sector. AI strategies can examine intricate anomalies in MRI images with a precision that surpasses human ability. Such models can recognize minor alterations in the hippocampus, which have been shown to be significant in identifying AD in animal studies (26). AI can also process extensive sets of patient data, including clinical information, lifestyle factors, and treatment results, to uncover features and forecast the transition from MCI to AD (27,6). Additionally, AI can deliver comparatively high efficacy in detecting and tracking Alzheimer's disease using speech recognition systems and natural language analysis (28,49). The ability of deep learning models to detect subtle differences in facial cues linked to AD has been validated (29). Another study examined fundus images for AD analysis (30), using a neural-network-based approach that builds a framework integrating CNN models on neuroimaging with hierarchical clustering methods.

Conventional Machine Learning

Conventional machine learning (ML) refers to traditional ML techniques that have been widely used for decades (1,31). Conventional approaches to Alzheimer's disease diagnosis rely mainly on extracting features from medical images, cognitive tests, and clinical assessments (31). These features capture alterations in brain structure or metabolism indicative of AD (32) and are derived from scans such as PET or MRI (33). The most common approach to learning patterns associated with Alzheimer's is supervised learning, in which algorithms are trained on labeled datasets, i.e., data with known outcomes. Popular techniques include SVM, k-NN, and Random Forests (34). These models classify brain images or patient data into classes such as "AD" or "Healthy" based on extracted features such as hippocampal volume, cortical thickness, or regional glucose metabolism (50). Feature selection is a major requirement, because high-dimensional data such as brain scans also contain irrelevant information. Dimensionality reduction algorithms such as PCA and LDA are therefore used to reduce this complexity (35). Apart from imaging data, ML models also incorporate cognitive test results, such as memory and attention assessments, and demographic data. Once trained, these models can support early diagnosis of Alzheimer's, helping clinicians detect decline before it progresses to more serious stages. Traditional ML methods are categorized into supervised and unsupervised learning (36,37). Here is a review of a few of the most popular ML algorithms:

Fig 2 : Domains of Artificial Intelligence (AI)

Support Vector Machine

A support vector machine (SVM) is a powerful machine learning technique commonly used for regression, anomaly detection, and both linear and nonlinear classification (38). SVMs are very versatile and are used in many applications, such as text categorization, image classification, spam filtering, handwriting recognition, facial recognition, anomaly detection, and gene expression analysis (40). SVMs are effective because they seek the hyperplane with the maximum margin of separation between the target classes, which makes them powerful for both binary and multiclass classification (39). This section briefly discusses the SVM algorithm and its ability to solve classification and regression problems, handling linear and nonlinear problems as well as outlier detection (40,41). When used to identify normal control (NC), mild cognitive impairment (MCI), and dementia, Bayesian network decision-making algorithms outperformed many well-known classification algorithms, including multilayer perceptron neural networks, naïve Bayesian networks, and logistic regression classification (LRC) (38).
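To make the maximum-margin idea concrete, here is a minimal sketch of a linear soft-margin SVM trained by stochastic subgradient descent on the hinge loss, in plain Python. The two features (standardized hippocampal volume and cortical thickness), all values, the function names, and the hyperparameters `C`, `lr`, and `epochs` are hypothetical choices for illustration, not taken from the cited studies; practical work would normally use a tested library solver and a kernel for nonlinear problems.

```python
# Minimal linear soft-margin SVM (illustrative sketch, not a production solver).
# Objective: 0.5 * ||w||^2 + C * sum(max(0, 1 - y_i * (w . x_i + b)))

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def train_linear_svm(X, y, C=1.0, lr=0.01, epochs=500):
    """Stochastic subgradient descent on the hinge loss; labels must be -1 or +1."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            if yi * (dot(w, xi) + b) < 1:
                # Inside the margin or misclassified: regulariser + hinge subgradient.
                w = [wj - lr * (wj - C * yi * xj) for wj, xj in zip(w, xi)]
                b += lr * C * yi
            else:
                # Correct side with enough margin: only the regulariser shrinks w.
                w = [wj - lr * wj for wj in w]
    return w, b

def predict(w, b, x):
    return 1 if dot(w, x) + b >= 0 else -1

# Hypothetical standardized features: [hippocampal volume, cortical thickness].
# +1 = healthy control, -1 = AD (toy values for illustration only).
X = [[1.0, 0.8], [1.2, 1.1], [0.9, 1.3],
     [-1.0, -0.9], [-1.3, -0.7], [-0.8, -1.2]]
y = [1, 1, 1, -1, -1, -1]

w, b = train_linear_svm(X, y)
print([predict(w, b, x) for x in X])
```

Only the points that fall inside the margin move the hyperplane, which is why the learned boundary depends on the "support vectors" rather than on every sample.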

Linear Discriminant Analysis

Linear discriminant analysis (LDA) is a statistical method for classification and dimensionality reduction that separates two or more classes of data through a linear combination of features. LDA maximizes the variance between classes while minimizing the variance within classes, establishing a discriminant function to predict the class of new instances (42). LDA, also known as Fisher's linear discriminant, is one of the most popular methods for dimensionality reduction (43). LDA assumes that the data for every class are normally distributed with the same covariance matrix. Consequently, LDA is highly effective when classes are well separated and features follow a Gaussian distribution. LDA is used in areas such as face recognition, medical diagnosis, and pattern recognition (44). The method reduces dimensionality by mapping high-dimensional data onto a lower-dimensional space while maintaining class separability (45,46). LDA is therefore valuable not only for classification tasks but also as an exploratory tool for visualizing complex data structures in a more understandable form.
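For two classes, Fisher's discriminant direction is $w = S_W^{-1}(m_1 - m_0)$, where $S_W$ is the pooled within-class scatter matrix and $m_0, m_1$ are the class means. The sketch below computes it in plain Python for two features and classifies by thresholding halfway between the projected class means, which assumes equal class priors. The toy data and function names are hypothetical.

```python
# Two-class Fisher linear discriminant in plain Python (2 features, illustrative).

def mean(rows):
    return [sum(col) / len(rows) for col in zip(*rows)]

def scatter(rows, m):
    """Within-class scatter matrix: sum of (x - m)(x - m)^T over the class."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for r in rows:
        d = [r[0] - m[0], r[1] - m[1]]
        for i in range(2):
            for j in range(2):
                s[i][j] += d[i] * d[j]
    return s

def project(w, x):
    # Map a 2-D point onto the 1-D discriminant axis.
    return w[0] * x[0] + w[1] * x[1]

def fisher_lda_2d(class0, class1):
    """Return projection w = Sw^-1 (m1 - m0) and a midpoint decision threshold."""
    m0, m1 = mean(class0), mean(class1)
    s0, s1 = scatter(class0, m0), scatter(class1, m1)
    sw = [[s0[i][j] + s1[i][j] for j in range(2)] for i in range(2)]
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]
    inv = [[sw[1][1] / det, -sw[0][1] / det],
           [-sw[1][0] / det, sw[0][0] / det]]
    diff = [m1[0] - m0[0], m1[1] - m0[1]]
    w = [inv[0][0] * diff[0] + inv[0][1] * diff[1],
         inv[1][0] * diff[0] + inv[1][1] * diff[1]]
    threshold = (project(w, m0) + project(w, m1)) / 2  # assumes equal priors
    return w, threshold

# Hypothetical standardized [hippocampal volume, cortical thickness] values.
ad = [[-1.0, -0.9], [-1.3, -0.7], [-0.8, -1.2]]   # class 0
healthy = [[1.0, 0.8], [1.2, 1.1], [0.9, 1.3]]    # class 1

w, t = fisher_lda_2d(ad, healthy)
labels = [int(project(w, x) > t) for x in ad + healthy]
print(w, t, labels)
```

The projection `project(w, x)` is the one-dimensional representation mentioned above: two features are reduced to a single coordinate along which the classes are maximally separated.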

Naïve Bayesian Approach

Based on Bayes' theorem, the naïve Bayes algorithm is a classification method used in data analysis and machine learning. It calculates the probability of a class given the observed attributes from other known probabilities. Gaussian naïve Bayes models continuous data using Gaussian probability density functions (51). The naïve Bayes model assumes that the observed (child) nodes are conditionally independent given the unobserved (parent) node in a directed acyclic graph, so naïve Bayes classifiers are very simple Bayesian networks (52,39). The accuracy of naïve Bayes classifiers is typically lower than that of more complex learning approaches such as artificial neural networks (ANN) (41). However, an extensive comparison of naïve Bayes with state-of-the-art techniques for rule induction, instance-based learning, and decision tree induction on common benchmarks found that it was sometimes superior to the other learning strategies, even on datasets with substantial feature dependence (41,53).
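A minimal Gaussian naïve Bayes sketch follows, assuming continuous features and using plain Python. It picks the class that maximizes $\log P(c) + \sum_i \log p(x_i \mid c)$; summing per-feature log-densities is exactly the conditional-independence ("naïve") assumption described above. The feature values and the "NC"/"AD" labels are hypothetical toy data, and the small `var_eps` term is an added safeguard against zero variance.

```python
import math

def fit_gaussian_nb(X, y, var_eps=1e-9):
    """Estimate per-class priors and per-feature Gaussian means/variances."""
    model = {}
    for c in sorted(set(y)):
        rows = [x for x, label in zip(X, y) if label == c]
        means = [sum(col) / len(rows) for col in zip(*rows)]
        variances = [sum((v - m) ** 2 for v in col) / len(rows) + var_eps
                     for col, m in zip(zip(*rows), means)]
        model[c] = (len(rows) / len(X), means, variances)
    return model

def log_gaussian(x, mean, var):
    return -0.5 * math.log(2 * math.pi * var) - (x - mean) ** 2 / (2 * var)

def predict_nb(model, x):
    """Pick the class maximising log P(c) + sum_i log p(x_i | c)."""
    best, best_score = None, -math.inf
    for c, (prior, means, variances) in model.items():
        score = math.log(prior) + sum(
            log_gaussian(xi, m, v) for xi, m, v in zip(x, means, variances))
        if score > best_score:
            best, best_score = c, score
    return best

# Hypothetical standardized [hippocampal volume, cortical thickness] values.
X = [[1.0, 0.8], [1.2, 1.1], [0.9, 1.3], [-1.0, -0.9], [-1.3, -0.7], [-0.8, -1.2]]
y = ["NC", "NC", "NC", "AD", "AD", "AD"]
model = fit_gaussian_nb(X, y)
print(predict_nb(model, [1.1, 0.9]), predict_nb(model, [-1.1, -1.0]))  # NC AD
```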

Decision Trees

Decision trees are a supervised learning model used for classification and regression problems. In data analysis, statistics, and machine learning (ML), decision trees are a popular predictive method for classification. To classify the dataset, the information gain of each attribute is calculated. In a tree, the leaf nodes represent the class labels, while the branches leading to them represent the combinations of input features that result in those labels (51). Decision trees (DT) classify instances by sorting them based on feature values: every node represents a feature of the instance to be classified, and every branch represents a value that the node can take (41). Decision tree classifiers typically use post-pruning techniques that evaluate the trees' performance as they are pruned using a validation set; any node can be removed and assigned the most common class of the training instances sorted to it (41,53).
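The information-gain criterion mentioned above can be sketched in a few lines. The example below, a plain-Python illustration rather than any particular library's implementation, computes Shannon entropy and the gain of each candidate threshold on one numeric feature and keeps the best split, which is the step a greedy tree builder repeats at every node. The volume values are hypothetical, in arbitrary units.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    n = len(labels)
    return -sum((k / n) * math.log2(k / n) for k in Counter(labels).values())

def information_gain(parent, left, right):
    """Parent entropy minus the size-weighted entropy of the two children."""
    n = len(parent)
    weighted = len(left) / n * entropy(left) + len(right) / n * entropy(right)
    return entropy(parent) - weighted

def best_threshold(values, labels):
    """Try midpoints between sorted distinct values; return (threshold, gain)
    for the split 'value <= threshold' with the highest information gain."""
    distinct = sorted(set(values))
    best = (None, -1.0)
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [lab for v, lab in zip(values, labels) if v <= t]
        right = [lab for v, lab in zip(values, labels) if v > t]
        gain = information_gain(labels, left, right)
        if gain > best[1]:
            best = (t, gain)
    return best

# Hypothetical hippocampal volumes (arbitrary units) and diagnoses.
volumes = [2.9, 3.1, 3.8, 4.0]
diagnosis = ["AD", "AD", "NC", "NC"]
print(best_threshold(volumes, diagnosis))  # split near 3.45 with gain 1.0
```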

K-Means Clustering

Clustering is an unsupervised learning strategy that works on unlabeled datasets (55). Hierarchical clustering builds a hierarchy of clusters using agglomerative or divisive techniques. Agglomerative clustering works from the bottom up, successively merging smaller clusters, whereas divisive clustering works from the top down, splitting a large cluster into smaller ones. Partitional clustering, by contrast, divides a dataset into groups of equal or unequal size, each described by a cluster mean. In K-means clustering, the dataset is divided into K groups, each represented by its cluster mean (56).

Fig 3 : Working of the K-means clustering algorithm
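The working of K-means can be sketched as Lloyd's algorithm, which alternates two steps until the centroids stop moving: assign every point to its nearest centroid, then move each centroid to the mean of its assigned points. This plain-Python version initializes the centroids from the first K points, a simplifying assumption (k-means++ or random restarts are common in practice); the 2-D points are hypothetical.

```python
def squared_distance(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

def kmeans(points, k, iters=100):
    """Lloyd's algorithm with centroids initialised from the first k points."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: squared_distance(p, centroids[c]))
                  for p in points]
        # Update step: each centroid moves to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: centroids (and hence assignments) no longer change
        centroids = new_centroids
    return labels, centroids

# Hypothetical 2-D feature vectors forming two loose groups.
points = [[0.9, 1.0], [-1.0, -1.1], [1.1, 0.8], [1.0, 1.2], [-0.9, -1.0], [-1.2, -0.8]]
labels, centroids = kmeans(points, k=2)
print(labels)  # [0, 1, 0, 0, 1, 1]
```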

Principal Component Analysis

Principal component analysis (PCA) is a dimensionality reduction technique that maps features into a lower-dimensional space. Both linear and nonlinear data transformations can be used for this purpose. The most popular linear transformation is PCA, an orthogonal transformation that converts a set of possibly correlated variables into linearly uncorrelated components (57). PCA thus reduces dimensionality by converting multidimensional information into low-dimensional information (39).

Fig 4 : Steps involved in principal component analysis
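The usual PCA steps (center the data, compute the covariance matrix, find its leading eigenvector, project onto it) can be sketched in plain Python. This version uses power iteration to obtain only the first principal component and assumes the starting vector is not orthogonal to it; the sample data are hypothetical correlated measurements.

```python
import math

def pca_first_component(X, iters=200):
    """Return (mean, unit eigenvector, eigenvalue) of the top principal component
    using power iteration on the sample covariance matrix."""
    n, d = len(X), len(X[0])
    mean = [sum(col) / n for col in zip(*X)]
    centered = [[x - m for x, m in zip(row, mean)] for row in X]
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    # Starting vector: assumed not orthogonal to the top eigenvector.
    v = [1.0 / (i + 1) for i in range(d)]
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    eigenvalue = sum(v[i] * sum(cov[i][j] * v[j] for j in range(d)) for i in range(d))
    return mean, v, eigenvalue

def project(X, mean, v):
    """Reduce each d-dimensional row to one coordinate along v."""
    return [sum((x - m) * vi for x, m, vi in zip(row, mean, v)) for row in X]

# Hypothetical pairs of correlated measurements.
X = [[2.5, 2.4], [0.5, 0.7], [2.2, 2.9], [1.9, 2.2], [3.1, 3.0],
     [2.3, 2.7], [2.0, 1.6], [1.0, 1.1], [1.5, 1.6], [1.1, 0.9]]
m, v, lam = pca_first_component(X)
print(v, lam)
print(project(X, m, v))  # one uncorrelated coordinate per sample
```

The eigenvalue is the variance retained along the component; a full PCA would repeat the process (or use a proper eigensolver) to obtain further components.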

Neural Networks (NN)

A neural network, also referred to as an artificial neural network (ANN), is based on a theoretical model of the biological neuron. Its structure consists of three levels: the input, hidden, and output layers (58).

In a Neural Network:

Input Layer: Accepts the input features and forwards them to subsequent layers.

Hidden Layers: Process the input data and learn patterns using weighted sums and activation functions.

Output Layer: Generates the final prediction from the learned patterns, often using an activation function such as softmax for classification tasks or a linear activation for regression (58).

Although a network typically performs only one task, neural networks can perform several prediction and classification tasks concurrently. The three primary elements of an ANN are the network architecture, the weight of each input connection, and the input and activation functions of each unit. In the vast majority of cases the network has a single output unit; in multi-class classification problems, however, it may have several output units (the conversion from output units to classes is handled by a post-processing step) (59). The neural network methodology has been reported to outperform conventional techniques in precision and effectiveness (58).

Fig 5 : A simple neural network (illustrative example)
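The layer roles described above can be seen in a forward pass. The following plain-Python sketch passes a feature vector through one hidden layer (weighted sums plus ReLU) and an output layer with softmax, giving one probability per class. The weights are hypothetical and untrained, and the class names NC/MCI/AD are illustrative labels only; training (backpropagation) is not shown.

```python
import math

def dense(x, W, b):
    """Weighted sum for one layer: W has one row of weights per output neuron."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def relu(v):
    return [max(0.0, z) for z in v]

def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in v]
    total = sum(exps)
    return [e / total for e in exps]

def forward(x, layers):
    """Input -> hidden layers (ReLU) -> output layer (softmax)."""
    h = x
    for W, b in layers[:-1]:
        h = relu(dense(h, W, b))
    W_out, b_out = layers[-1]
    return softmax(dense(h, W_out, b_out))

# Hypothetical network: 3 input features -> 4 hidden units -> 3 classes (NC, MCI, AD).
layers = [
    ([[0.2, -0.5, 0.1], [0.7, 0.3, -0.2], [-0.4, 0.6, 0.5], [0.1, 0.1, 0.1]],
     [0.0, 0.1, -0.1, 0.05]),
    ([[0.5, -0.3, 0.2, 0.1], [-0.2, 0.4, 0.3, -0.1], [0.1, 0.2, -0.5, 0.3]],
     [0.0, 0.0, 0.0]),
]
probs = forward([0.8, -0.2, 0.5], layers)
print(probs)  # three class probabilities that sum to 1
```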

Table 1. Comparison of the accuracy of several machine learning techniques

Deep learning

Deep learning has recently emerged as a popular research area in artificial intelligence (AI). A significant drawback of traditional machine learning is the selectivity-invariance problem: these methods have a restricted ability to process data in its raw form. Selectivity-invariance requires selecting the features that carry more information while disregarding less informative ones, and the chosen features must be independent of one another. This motivated researchers to advance machine learning techniques, specifically deep learning. Deep learning, sometimes called representation learning, is a set of methods that allow a machine to receive raw data and automatically discover the representations needed for classification or detection (60). Deep learning methods have multiple levels of representation, obtained by composing nonlinear modules that transform the raw data into increasingly abstract representations for decision-making. This makes it easier to solve intricate, nonlinear problems. Deep learning is founded on automated feature learning, which offers versatility and knowledge transfer. As the term "deep learning" indicates, it typically involves intricate structures of many hidden layers (61). Deep learning requires a large amount of training data. The most commonly used deep networks are convolutional neural networks (CNNs) and recurrent neural networks (RNNs). Fig 6 shows a deep network architecture; in contrast to conventional machine learning, deep learning needs a large amount of data to train a network.

Fig 6: A deep network architecture

CNNs (convolutional neural networks)

The CNN is the most widely used deep learning method and is inspired by the visual cortex of animals (62). CNNs are widely used in object identification, object tracking, text detection and recognition, visual recognition, pose estimation, scene classification, and many other applications (63). CNNs are similar to artificial neural networks and can be interpreted as a directed acyclic graph of neurons arranged in an organized structure. In CNNs, unlike conventional neural networks, each neuron in a hidden layer is connected only to a subset of the neurons in the preceding layer. This sparse connectivity enables the network to learn features implicitly. Each CNN layer convolves multiple two-dimensional filters with the layer's input, so deep convolutional networks perform hierarchical feature extraction (64). As shown in Fig 7, a CNN is made up of convolutional, pooling, and dense layers.

Fig 7: A typical convolutional Neural Network (CNN)

Convolutional Layers

The convolutional layer is the main building block of a CNN, where most of the computation takes place. Convolutional layers consist of an arrangement of neurons together with a collection of feature maps. Each layer contains filters (kernels) that are convolved with the input to generate a 2-D activation map (64), as shown in Fig 8. Sharing weights among neurons reduces the network's complexity by keeping the number of parameters small (65). CNNs are trained with backpropagation, which involves convolution with spatially flipped filters. These layers apply the convolution operation with their filters and pass the result to subsequent layers.

Fig 8 : CNN’s Convolutional algorithm
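The convolution step can be sketched directly: one small kernel slides across the image, and at each position the element-wise products are summed into one value of the activation map. Because the same kernel weights are reused at every position, this also illustrates the weight sharing mentioned above. The sketch assumes no padding and stride 1, and, like most deep learning libraries, computes cross-correlation (the kernel is not flipped). The image and kernel values are hypothetical.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image without padding (stride 1) and sum the
    element-wise products at each position (cross-correlation, no kernel flip)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

image = [[1, 2, 3, 0],
         [4, 5, 6, 1],
         [7, 8, 9, 2]]
kernel = [[1, 0],
          [0, -1]]   # responds to diagonal intensity changes
print(conv2d_valid(image, kernel))   # [[-4, -4, 2], [-4, -4, 4]]
```

A 3×4 input and a 2×2 kernel give a 2×3 activation map; a real layer applies many such kernels and adds a bias and nonlinearity.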

Pooling Layers

CNNs consist of alternating convolutional and pooling layers. Pooling layers reduce the spatial dimensions of the activation maps and the number of parameters while retaining the most salient information, which lowers the overall computational complexity. Pooling layers also help mitigate overfitting. Frequently used pooling operations include mean pooling, max pooling, stochastic pooling (66), spatial pyramid pooling, spectral pooling, and multiscale pooling (67). The max pooling operation is shown in Fig 9.

Fig 9: CNN’s pooling algorithm
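Max and mean pooling can be sketched as one function that applies a summary operation to each window. The example below uses 2×2 windows with stride 2, a common choice but an assumption here, so a 4×4 feature map shrinks to 2×2. The feature-map values are hypothetical.

```python
def pool2d(fmap, size=2, stride=2, op=max):
    """Apply `op` (e.g. max, or a mean function) to each size x size window."""
    out = []
    for i in range(0, len(fmap) - size + 1, stride):
        row = []
        for j in range(0, len(fmap[0]) - size + 1, stride):
            window = [fmap[i + a][j + b] for a in range(size) for b in range(size)]
            row.append(op(window))
        out.append(row)
    return out

def mean(values):
    return sum(values) / len(values)

fmap = [[1, 3, 2, 0],
        [4, 6, 5, 1],
        [7, 2, 9, 8],
        [0, 1, 3, 4]]
print(pool2d(fmap))            # max pooling:  [[6, 5], [7, 9]]
print(pool2d(fmap, op=mean))   # mean pooling: [[3.5, 2.0], [2.5, 6.0]]
```

Each output keeps only one value per window, which is where the reduction in size and computation comes from.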

Fully connected layers

In deep learning, a fully connected (FC) layer, also referred to as a dense layer, is a layer in which every neuron is connected to every neuron in the previous layer, as in a conventional NN (68). It transforms the high-dimensional feature map into a one-dimensional vector and, through higher-level reasoning, provides the likelihood that the input belongs to a particular class. Some recent developments have produced approaches that replace fully connected layers entirely, such as the "Network In Network" (NIN) architecture (69). Fig 10 shows a fully connected (FC) network layer.
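The sketch below shows, in plain Python, the two things an FC layer does after the convolutional stages: flatten the stacked feature maps into one vector, then compute a full weighted sum for every output neuron. It also counts the layer's parameters, which shows why FC layers are expensive; for example, flattening a 7×7×512 feature map (the shape VGG16 produces before its first FC layer) into 4096 units needs over 100 million parameters. The feature maps and weights in the example are hypothetical.

```python
def flatten(feature_maps):
    """Turn a list of 2-D feature maps (channels) into one 1-D vector."""
    return [v for fmap in feature_maps for row in fmap for v in row]

def dense(x, W, b):
    # Every output neuron sees every input value: a full weight row per neuron.
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def fc_param_count(n_inputs, n_outputs):
    """Weights (n_inputs * n_outputs) plus one bias per output neuron."""
    return n_inputs * n_outputs + n_outputs

maps = [[[6, 5], [7, 9]], [[1, 0], [2, 3]]]   # two hypothetical 2x2 pooled maps
x = flatten(maps)                               # 8 values
W = [[0.1] * 8, [-0.1] * 8]                     # hypothetical weights, 2 outputs
print(dense(x, W, [0.0, 0.0]))
print(fc_param_count(7 * 7 * 512, 4096))        # 102764544
```

In a classifier, the FC outputs (logits) would then pass through softmax to give class probabilities.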

Recurrent neural network (RNN)

RNNs are used for tasks that involve sequential inputs, such as text and speech. Backpropagation was initially used to train RNNs. RNNs process an input sequence one element at a time, maintaining a state vector in their hidden units that implicitly contains information about the history of all past elements of the sequence. Fig 11 shows the general structure of an RNN unrolled across discrete time steps. When the outputs of the hidden units at different time steps are treated as the outputs of different neurons in a deep multilayer network, it becomes clear how backpropagation can be used to train RNNs (70). RNNs are powerful and flexible systems, but problems arise during training because the backpropagated gradients either grow or shrink at each time step, so over many time steps they typically explode or vanish. When unfolded, RNNs can be interpreted as very deep feedforward networks in which all layers share the same weights (71). The challenge with RNNs is retaining information about previous inputs for a long time, that is, capturing long-term dependencies. To overcome this, the long short-term memory (LSTM) method augments the network with an explicit memory, using special hidden units that preserve inputs over long durations. LSTM delivered considerable improvements in speech recognition (72).

Fig 11 : RNN’s layered architecture
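The exploding and vanishing gradient problem can be seen with a one-unit (scalar) RNN, $h_t = \tanh(w_x x_t + w_h h_{t-1} + b)$. By the chain rule, $\partial h_T / \partial h_0 = \prod_t (1 - h_t^2)\, w_h$, so each time step multiplies the gradient by the same recurrent weight. The plain-Python sketch below uses hypothetical weights and inputs: with $w_h = 0.5$ the product shrinks towards zero over 20 steps, and with $w_h = 3$ and zero input it grows to $3^{20}$.

```python
import math

def rnn_forward(xs, w_x, w_h, b, h0=0.0):
    """Scalar Elman RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).
    The same weights are reused at every time step."""
    hs, h = [], h0
    for x in xs:
        h = math.tanh(w_x * x + w_h * h + b)
        hs.append(h)
    return hs

def grad_last_wrt_first(hs, w_h):
    """d h_T / d h_0 by the chain rule: product of (1 - h_t^2) * w_h over time."""
    g = 1.0
    for h in hs:
        g *= (1.0 - h * h) * w_h
    return g

xs = [0.5] * 20                                  # a hypothetical 20-step sequence
hs = rnn_forward(xs, w_x=1.0, w_h=0.5, b=0.0)
print(grad_last_wrt_first(hs, w_h=0.5))          # tiny: the gradient has vanished

hs_zero = rnn_forward([0.0] * 20, w_x=1.0, w_h=3.0, b=0.0)
print(grad_last_wrt_first(hs_zero, w_h=3.0))     # 3486784401.0: it has exploded
```

LSTM units address this by routing information through a gated memory cell instead of repeatedly multiplying by the same recurrent factor.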

XAI Methods for Alzheimer’s Detection

Explainable AI (XAI) techniques are increasingly used for Alzheimer's disease identification, improving the interpretability and clarity of machine learning (ML) models. Conventional AI models, though accurate, often act as "black boxes," making their decision-making process hard for clinicians to comprehend. XAI techniques such as SHapley Additive exPlanations (SHAP), Local Interpretable Model-agnostic Explanations (LIME), and attention mechanisms offer insight into the main factors influencing predictions. These techniques help pinpoint particular brain regions, biomarkers, or patterns that signal Alzheimer's, enhancing confidence in AI-assisted diagnostics and helping healthcare providers make decisions about early identification and tailored treatment (1).

SHapley Additive exPlanations, or SHAP

SHAP values assign each input feature a contribution score that represents its influence on the model's output. In the context of Alzheimer's disease prediction, SHAP analysis offers a measurable indicator of the impact of specific MRI features or regions. This approach helps identify the key elements influencing the model's judgment, leading to a comprehensive understanding of the underlying relationships in the data (21).
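SHAP is built on Shapley values from cooperative game theory: a feature's score is its average marginal contribution to the prediction over all coalitions of the other features. The sketch below computes exact Shapley values by enumerating every coalition, which is only feasible for a handful of features (the cost grows as $2^n$). As a simplifying assumption, a feature "absent" from a coalition is replaced by a single baseline value; SHAP implementations typically average over a background dataset or use faster approximations. The risk model and feature values are hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(f, x, baseline):
    """Exact Shapley values; absent features take their baseline value."""
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

def risk(z):
    # Hypothetical model with an interaction between features 0 and 1.
    return 0.5 * z[0] + 0.3 * z[1] + 0.2 * z[0] * z[1] - 0.1 * z[2]

x = [2.0, 1.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(risk, x, baseline)
print(phi)  # approximately [1.2, 0.5, -0.3]
```

The scores add up to the difference between the prediction and the baseline prediction (here 1.4), which is the additivity property that gives SHAP its name; the interaction term is split equally between the two features involved.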

Local Interpretable Model-agnostic Explanations, or LIME

To achieve interpretability, LIME takes a model-agnostic approach and approximates the model's decision boundary around a particular instance. It builds a locally interpretable model by perturbing the input's features and observing the resulting changes in the predictions. When used to predict Alzheimer's disease, LIME sheds light on how even small changes to the input variables affect the classification. This makes it easier to understand the model's decision-making process in greater depth, especially for complex neural network architectures. Taken together, these XAI methods provide a comprehensive view of the Alzheimer's disease detection process: Grad-CAM highlights relevant image regions through spatial visualization, whereas SHAP and LIME contribute quantitative information about feature importance and local interpretation (21).
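The LIME procedure described above (perturb, query the black box, weight by proximity, fit a simple model) can be sketched for tabular features in plain Python. This is a simplified variant, not the reference LIME implementation: it uses Gaussian perturbations, an exponential proximity kernel, and a weighted least-squares linear surrogate, and it omits LIME's interpretable representations and feature selection. The `black_box` function, the instance, and all parameter values are hypothetical.

```python
import math
import random

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    sol = [0.0] * n
    for r in range(n - 1, -1, -1):
        sol[r] = (M[r][n] - sum(M[r][c] * sol[c] for c in range(r + 1, n))) / M[r][r]
    return sol

def lime_explain(f, x, n_samples=500, scale=0.5, kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear surrogate to black-box f around x.
    Returns (intercept, per-feature local coefficients)."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]          # perturb the instance
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        rows.append([1.0] + z)                                 # 1.0 -> intercept term
        targets.append(f(z))                                   # query the black box
        weights.append(math.exp(-dist2 / kernel_width ** 2))   # closer = heavier
    p = len(x) + 1
    # Weighted least squares via the normal equations (Z^T W Z) beta = Z^T W y.
    A = [[sum(w * r[i] * r[j] for w, r in zip(weights, rows)) for j in range(p)]
         for i in range(p)]
    b = [sum(w * r[i] * t for w, r, t in zip(weights, rows, targets)) for i in range(p)]
    beta = solve(A, b)
    return beta[0], beta[1:]

def black_box(z):
    # Hypothetical classifier output: probability of AD from two features.
    return 1.0 / (1.0 + math.exp(-(2.0 * z[0] - 1.0 * z[1])))

intercept, coefs = lime_explain(black_box, [0.2, 0.1])
print(coefs)  # local effect of each feature near this instance
```

The surrogate's coefficients are the explanation: their signs and sizes describe how the black box responds to each feature in the neighbourhood of this one case, not globally.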

LAVA, a Granular Neuron-Level Explainer

LAVA is a sophisticated AI method that focuses on neuron-level explanation, as demonstrated by its application to the identification of Alzheimer's disease from retinal images (30). To determine which neurons are most significant for a prediction, LAVA examines particular neuron responses using attention techniques. Because it examines variability at the layer level, it provides a more comprehensive description of the network's capabilities and a better understanding of the internal workings of deep neural networks. This approach is especially helpful for complex tasks in which clinicians' trust depends on understanding the characteristics that contribute to a given prediction. This degree of interpretability helps medical professionals rely on AI systems to aid diagnosis or track the course of a condition. In deep learning networks, LAVA's neuron-level interpretability allows model predictions to be linked to particular neurons, which could increase the reliability of the AI technique.

Types of XAI Methods

Explainable artificial intelligence (XAI) techniques try to increase the clarity and transparency of AI algorithms by disclosing information about their decision-making processes. These strategies are crucial for establishing trust and helping people understand how AI arrives at its predictions. XAI techniques generally fall into two broad categories: model-specific and model-agnostic. Model-specific techniques give explanations based on the internal workings of an AI model and are tied to its architecture. Model-agnostic techniques, however, are more flexible and applicable to any type of model regardless of its construction. Each approach has advantages and disadvantages: model-agnostic techniques provide greater flexibility, whereas model-specific methods deliver higher accuracy. Additionally, XAI methods may be classified as post hoc, where explanations are provided after the model has been fully trained, or intrinsic, where the model is interpretable by its very design. This section examines the mechanisms, functions, and trade-offs among interpretability, adaptability, and precision associated with various XAI methodologies (1). Fig 12 shows a categorization of XAI techniques and the associated groups.

Fig 12 : Categorization of XAI techniques and the associated groups.

Model-Specific XAI Methods

Model-specific approaches are closely tied to the design of AI architectures and are intended to exploit particular characteristics or capabilities inherent in that design. These methods integrate well with the model's framework but primarily emphasize machine-learned characteristics, which might not consistently correspond with domain-specific insights, particularly in clinical environments. For instance, Grad-CAM is frequently used with convolutional neural networks (CNNs) to highlight the image regions that most influence the algorithm's decisions, offering a glimpse into the network's internal mechanisms. The model outlined in (73) uses techniques such as Grad-CAM together with principal component analysis to analyze the layers of a neural network and reveal its decision-making mechanisms. Nonetheless, the primary disadvantage of model-specific methods is their lack of transferability: they are typically customized for certain architectures and might not work with other models. Notwithstanding this restriction, model-specific techniques can be very effective in XAI, especially when the explanations accurately represent the model's internal workings. For instance, the VGG16 model, when used with transfer learning for early identification of Alzheimer's disease, produces precise and comprehensible predictions due to its architecture (74). Although these methods provide precise and customized perspectives, they do not apply universally to all algorithms.
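Grad-CAM shows why such methods are model-specific: it needs the activations of a CNN's last convolutional layer and the gradients of the class score with respect to them. Each feature map $A^k$ is weighted by its spatially averaged gradient $\alpha_k$, the weighted maps are summed, and a ReLU keeps only regions that increase the class score. The plain-Python sketch below assumes those activations and gradients have already been obtained from a deep learning framework and omits the final upsampling to image size; the small arrays are hypothetical.

```python
def grad_cam(activations, gradients):
    """Grad-CAM heat map from the last conv layer.
    activations[k][i][j]: feature map k; gradients[k][i][j]: d(class score)/d(activation).
    Weight each map by its spatially averaged gradient, sum, then apply ReLU."""
    h, w = len(activations[0]), len(activations[0][0])
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    cam = [[0.0] * w for _ in range(h)]
    for alpha, fmap in zip(alphas, activations):
        for i in range(h):
            for j in range(w):
                cam[i][j] += alpha * fmap[i][j]
    return [[max(0.0, v) for v in row] for row in cam]

# Hypothetical 2-channel, 2x2 activations and their gradients.
acts = [[[1, 0], [0, 1]], [[0, 2], [2, 0]]]
grads = [[[1, 1], [1, 1]], [[-1, -1], [-1, -1]]]
print(grad_cam(acts, grads))  # [[1.0, 0.0], [0.0, 1.0]]
```

Here the second channel has a negative average gradient, so the regions it activates are suppressed in the heat map.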

Drawbacks of Model-Specific XAI

Although XAI techniques tailored to specific models offer detailed and pertinent explanations for those models, the black-box nature of the underlying models can still make the explanations difficult for people to grasp completely. A model's internal mechanisms can be intricate and hard to understand, and because the explanations are customized to the particular architecture, they do not always expose the general reasoning behind its choices. Moreover, intricate relationships among model parameters can obscure the reasoning behind recommendations, making it difficult for people to comprehend the basis for specific predictions. Furthermore, these strategies transfer only partially to algorithms with different architectural designs, because they rely heavily on the model's framework. For example, Grad-CAM is most effective with CNNs but may be less useful when applied to recurrent neural networks (RNNs) or other algorithms with different functional architectures. Because of this limited versatility, such methods can offer deep insight into one algorithm yet struggle to generalize across AI technologies. In addition, because they depend on particular layers and functions inside the framework, developers frequently must build tailored solutions for each algorithm, which can consume significant resources. This is illustrated by the VGG16 model(74): different strategies are necessary when dealing with other architectures, even though the method yields precise insights owing to the particular characteristics of the VGG16 configuration.

Model-Agnostic XAI Techniques

Model-agnostic techniques are strategies that can be applied to any AI framework, irrespective of its structure. They explain how different input variables influence a model's output, which makes them applicable across different algorithms without any modification. Local Interpretable Model-Agnostic Explanations (LIME) is one such technique: it produces justifications by examining the algorithm's behavior around particular instances. Another is SHAP (SHapley Additive exPlanations), which quantifies the contribution of each feature to a prediction. These methods are widely used because of their adaptability, and studies have applied LIME and SHAP across different models to assess their dependability(75). Nevertheless, because these approaches do not use the model's internal workings, their explanations may lack the precision of methods tailored to particular models. This general-purpose approach can occasionally produce less accurate explanations, particularly for more intricate models.
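The quantity SHAP estimates is the Shapley value of each feature. For a model with only a handful of inputs it can be computed exactly by enumerating every coalition of features, which the sketch below does. It is not the `shap` library's algorithm (that library approximates these values for large models), and it assumes one particular convention: a feature absent from a coalition is replaced by a fixed baseline value such as a cohort mean. The toy risk function and its inputs are hypothetical.

```python
"""Exact Shapley-value attributions for a small black-box model (sketch).

Assumption: a feature "absent" from a coalition is replaced by a fixed
baseline value (e.g. a cohort mean), one of several possible conventions.
"""
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], float]


def shapley_values(model: Model, x: Sequence[float],
                   baseline: Sequence[float]) -> List[float]:
    n = len(x)
    phi = [0.0] * n

    def value(coalition: set) -> float:
        # Features in the coalition keep their real value; others use the baseline
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


if __name__ == "__main__":
    # Hypothetical risk score over three made-up, standardized features
    def risk(z):
        return 0.6 * z[0] + 0.3 * z[1] + 0.5 * z[0] * z[2]

    x, base = [1.0, 2.0, 1.0], [0.0, 0.0, 0.0]
    phi = shapley_values(risk, x, base)
    print([round(p, 3) for p in phi])
    # Efficiency property: attributions sum to f(x) - f(baseline)
    print(round(sum(phi), 3), round(risk(x) - risk(base), 3))
```

Exact enumeration costs on the order of 2^n model calls, which is why practical SHAP tools rely on sampling or model-specific shortcuts for imaging data with thousands of inputs.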

Difficulties with Model-Agnostic XAI

Model-independent methods such as LIME and SHAP are valuable because of their versatility; however, they also have important disadvantages. Their explanations can at times be vague, because they are not tailored to a model's internal organization. This issue is particularly evident with complex algorithms, where broad model-independent strategies may miss delicate relationships between features or layers. For example, the study in(76) used LIME to explain the predictions of a MobileNetV3 model but pointed out a possible inconsistency between the resulting explanation and the model's actual decision-making, since LIME identified non-brain regions as significant. Such inaccuracies can mislead users when unimportant traits are wrongly identified as significant. In addition, when applied to more intricate AI models, these techniques can yield generic explanations that fail to capture the intricacies of the model's decision-making, because they do not exploit specific characteristics of the model's architecture.
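The following sketch shows the mechanism behind LIME's local explanations and why they can drift from the model's true reasoning: the explanation is only a weighted linear fit to the model's outputs on randomly perturbed copies of one input. It is a simplified illustration, not the `lime` package's implementation. The hypothetical `predict_masked` callback, the cosine-distance exponential kernel, the kernel width, the sample count, and the small ridge penalty are all assumptions chosen for readability.

```python
"""LIME-style local surrogate explanation for one instance (sketch).

Assumptions (not the `lime` package's exact algorithm): the instance is
split into d interpretable binary segments (e.g. superpixels);
`predict_masked` is a caller-supplied function returning the model's class
probability when only the segments marked 1 are kept; kernel, distance
and ridge values are illustrative defaults.
"""
import math
import random
from typing import Callable, List, Sequence


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the small linear system a @ x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def lime_explain(predict_masked: Callable[[Sequence[int]], float], d: int,
                 n_samples: int = 500, kernel_width: float = 0.25,
                 ridge: float = 1e-3, seed: int = 0) -> List[float]:
    """Return one local importance weight per segment."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        mask = [rng.randint(0, 1) for _ in range(d)]  # 1 = segment kept
        on = sum(mask)
        # Cosine distance between the mask and the unperturbed instance (all ones)
        dist = 1.0 - math.sqrt(on / d) if on else 1.0
        weights.append(math.exp(-(dist ** 2) / kernel_width ** 2))
        rows.append([1.0] + [float(v) for v in mask])  # leading 1.0 = intercept
        targets.append(predict_masked(mask))
    # Weighted ridge regression: (X^T W X + ridge * I) beta = X^T W y
    k = d + 1
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights))
             + (ridge if i == j else 0.0) for j in range(k)] for i in range(k)]
    xtwy = [sum(w * r[i] * t for r, t, w in zip(rows, targets, weights))
            for i in range(k)]
    beta = _solve(xtwx, xtwy)
    return beta[1:]  # drop the intercept
```

Because the surrogate only sees how the output changes when segments are hidden, a segment outside the brain (for example, background or skull) can receive a large weight whenever hiding it happens to shift the prediction, which is one way the inconsistency reported in(76) can arise.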

Model-specific vs. model-agnostic approaches

Model-specific techniques are designed for a particular machine learning model or algorithm. They exploit the model's internal mechanisms and assumptions to improve its interpretability or effectiveness; for instance, decision trees are frequently explained by visualizing the tree itself. Model-agnostic methods, conversely, do not depend on the particular model employed. They can be applied to any machine learning model, irrespective of its architecture, to examine its behavior or explain its predictions. Examples include SHapley Additive exPlanations (SHAP) and Local Interpretable Model-Agnostic Explanations (LIME), both of which apply to different models(75,76).
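The defining property of a model-agnostic method is that it needs nothing but the model's prediction function. Permutation feature importance, a further model-agnostic technique that the text does not discuss, shows this in a few lines: shuffle one feature column, re-score the model, and record the drop in accuracy. The sketch below is a generic illustration; the toy model and data in the usage note are hypothetical.

```python
"""Permutation feature importance: a model-agnostic check (sketch).

Only a `predict` callable is required, so the same function works for a
decision tree, an SVM, or a wrapper around a CNN.
"""
import random
from typing import Callable, List, Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    return sum(int(a == b) for a, b in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict: Callable[[Sequence[float]], int],
                           X: List[List[float]], y: List[int],
                           n_repeats: int = 10, seed: int = 0) -> List[float]:
    """Mean accuracy drop when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    base = accuracy(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the label
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(base - accuracy(y, [predict(r) for r in X_perm]))
        importances.append(sum(drops) / n_repeats)
    return importances
```

For a hypothetical classifier `lambda r: int(r[0] > 0.5)` that ignores its second input, the second importance is exactly zero while the first is large, even though the function never inspects how the classifier works internally.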

Challenges in implementing XAI

There are various obstacles to implementing XAI for Alzheimer's disease detection. A significant one is the lack of large, high-quality datasets: the study in(67) noted that there is insufficient data to support ML models, particularly when DTI data and white matter features are used as biomarkers. The work in(74) likewise highlighted the need for additional datasets to confirm the high accuracy achieved by the VGG16 model, underscoring that reliable XAI requires large amounts of data to ensure the correctness and generalizability of its insights. Another common issue is balancing the need for interpretability against the accuracy of intricate models. Striking this balance is difficult, as shown by the findings in(76), where LIME identified non-cerebral regions as significant, casting doubt on the reliability of explanations for highly accurate but intricate models such as MobileNetV3. A further significant obstacle is translating XAI from research into clinical practice.

Intrinsic technique

Intrinsic models are designed to be easily comprehensible. Classical models such as linear regression are interpretable in this sense because of their comparatively simple structure. In this case, the model itself provides both the prediction and an accompanying explanation that a human can read(77).
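For a linear model the explanation is the model itself: the prediction decomposes exactly into one term per feature (weight times value) plus an intercept. The sketch below makes that decomposition explicit. The feature names and coefficients in the demo are hypothetical placeholders, not values fitted to any Alzheimer's cohort, and the output is a generic linear score rather than a calibrated probability.

```python
"""Reading an intrinsically interpretable linear model (sketch)."""
from typing import Dict, Tuple


def explain_linear(weights: Dict[str, float], intercept: float,
                   x: Dict[str, float]) -> Tuple[float, Dict[str, float]]:
    """Return the linear score and each feature's additive contribution w_i * x_i."""
    contributions = {name: w * x[name] for name, w in weights.items()}
    prediction = intercept + sum(contributions.values())
    return prediction, contributions


if __name__ == "__main__":
    # Hypothetical standardized inputs (z-scores) and illustrative weights
    weights = {"hippocampal_volume_z": -0.8, "cortical_thickness_z": -0.5, "age_z": 0.3}
    patient = {"hippocampal_volume_z": -1.5, "cortical_thickness_z": -0.4, "age_z": 1.0}
    score, parts = explain_linear(weights, intercept=0.1, x=patient)
    # List features from most to least influential for this patient
    for name, c in sorted(parts.items(), key=lambda kv: -abs(kv[1])):
        print(f"{name:>22}: {c:+.2f}")
    print(f"{'score':>22}: {score:+.2f}")
```

No separate explainer is needed: the contributions add up to the score by construction, which is exactly what post-hoc methods try to approximate for more complex models.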

Post-hoc Explaining

Post-hoc explanations are interpretable information obtained by applying external techniques to a model after it has been trained (for example, to a neural network once training is complete). Examples of post-hoc interpretation strategies include layer-wise relevance propagation, backpropagation-based saliency maps(78), and class activation mapping.
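As a post-hoc example, layer-wise relevance propagation (LRP) takes an already trained network and redistributes the output score backwards, layer by layer, onto the inputs. The sketch below applies the LRP-epsilon rule, R_i = sum_j a_i w_ji / (z_j + eps * sign(z_j)) R_j, to a tiny two-layer ReLU network. The weights are hypothetical stand-ins for a trained model, and biases are omitted so that relevance is approximately conserved from output to input.

```python
"""Layer-wise relevance propagation (LRP-epsilon) for a tiny ReLU network (sketch)."""
from typing import List

Matrix = List[List[float]]  # weights[j][i]: connection from input i to unit j


def forward(w: Matrix, a: List[float], relu: bool) -> List[float]:
    z = [sum(wji * ai for wji, ai in zip(row, a)) for row in w]
    return [max(0.0, v) for v in z] if relu else z


def lrp_epsilon(w: Matrix, a: List[float], r_out: List[float],
                eps: float = 1e-6) -> List[float]:
    """Redistribute the relevance r_out of one dense layer onto its inputs a."""
    r_in = [0.0] * len(a)
    for j, row in enumerate(w):
        z_j = sum(wji * ai for wji, ai in zip(row, a))
        denom = z_j + (eps if z_j >= 0 else -eps)  # stabilizer avoids division by ~0
        for i, (wji, ai) in enumerate(zip(row, a)):
            r_in[i] += ai * wji / denom * r_out[j]
    return r_in


def explain(w1: Matrix, w2: Matrix, x: List[float], target: int) -> List[float]:
    """Relevance of each input feature for the logit of class `target`."""
    h = forward(w1, x, relu=True)
    out = forward(w2, h, relu=False)
    # Start from the target logit only; other outputs get zero relevance
    r_out = [out[k] if k == target else 0.0 for k in range(len(out))]
    r_hidden = lrp_epsilon(w2, h, r_out)
    return lrp_epsilon(w1, x, r_hidden)
```

Because the rule only needs forward activations and weights that already exist after training, it can be applied without changing or retraining the model, which is what makes it post hoc.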

The role of XAI in identifying Alzheimer’s disease

Explainable artificial intelligence (XAI) is essential in identifying Alzheimer's disease (AD) because it improves the clarity and understandability of the machine learning models used for diagnosis(7). Conventional AI models, though efficient, frequently function as "black boxes," making it difficult for healthcare providers to rely on them and to understand the rationale behind their decisions. XAI tackles this problem by explaining how AI models reach their judgements, thereby enhancing their applicability in clinical environments. In AD diagnosis, XAI can help identify important biomarkers in imaging data, genetic details, and cognitive evaluations(24). By clarifying the rationale behind predictions, XAI helps healthcare professionals understand which particular traits, such as brain patterns or specific genetic indicators, signal early-stage Alzheimer's. This clarity boosts trust in AI-based diagnostics and enables prompt action(74,76). Additionally, XAI supports patient-centered care by ensuring that diagnostic models are consistent with clinical knowledge, allowing healthcare professionals to validate AI outcomes against their own insights(75). It also aids regulatory approval and deployment by demonstrating that AI tools are safe, trustworthy, and stable. In summary, XAI is crucial for building AI-based approaches to Alzheimer's disease, enhancing both diagnostic precision and the reliability of these sophisticated technologies in practical clinical settings.

Future Directions and Opportunities

There are many promising opportunities to advance explainable artificial intelligence for AD detection. One avenue is developing interpretability methods that offer more precise insights into the decision-making processes of intricate AI models. Although techniques such as SHAP, Grad-CAM, and LIME have substantially improved model transparency, they still struggle to capture the complexity of medical image analysis(73,75). As medical professionals rely increasingly on these tools to support treatment, it is crucial that AI technologies offer both precise predictions and clinically useful interpretations. More precise AI techniques that can interpret multimodal data, including magnetic resonance imaging (MRI), positron emission tomography (PET), and medical biomarkers, would significantly improve diagnostic precision and trust in AI-driven judgments(8,81). However, such complex models are difficult to decode, so future research should focus on developing techniques that handle multimodal input effectively(74,76). Substantial further study is also required to create AI methods that work across different environments without compromising interpretability(27). The most difficult part, though, is making these sophisticated models understandable to non-experts in busy medical settings. Whereas prior research has examined XAI's ability to increase diagnostic precision, this review offers a new perspective by proposing the "Feature-Augmented" explanation as a forward-looking solution. This kind of explanation targets the black-box problem in current XAI models, which existing approaches do not adequately address.

Summary and Challenges

The variety of data used to identify Alzheimer's disease substantially improves the capacity of XAI systems to learn and make decisions. Owing to the shortage of extensive datasets, however, one of the main drawbacks of currently available algorithms is their inability to provide comprehensive interpretations(1). There is also a trade-off between interpretability and classification or forecasting performance: existing interpretable models are not black boxes, but they are generally regarded as less precise or efficient than more sophisticated data-driven techniques(79). AI systems may improve the precision of their forecasts with more data, although the computational performance of training usually decreases as datasets grow larger. Parallel deep learning methods have been proposed as an efficient remedy for this issue(80), and applying such strategies to massive datasets yielded considerable speed-ups. Even with the advances in XAI algorithms for Alzheimer's disease detection, interpretability remains a significant obstacle. For future XAI algorithms to be used effectively in clinical settings, they must offer intelligible interpretations, and the difficulty lies in making those descriptions precise. There are concerns regarding the validity of the explanations produced by current Alzheimer's disease identification techniques, which sometimes highlight non-brain regions as crucial to their decisions.
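One simple way to surface the problem of explanations that highlight non-brain regions is to measure how much of an attribution map falls outside a brain mask (for example, one produced by skull stripping). The sketch below works with a map from any XAI method (Grad-CAM, LIME, SHAP). It is a hypothetical sanity check rather than an established metric, and the 0.2 threshold is an arbitrary illustrative value, not a published criterion.

```python
"""Sanity check: how much attribution falls outside the brain? (sketch)"""
from typing import List

Grid = List[List[float]]


def outside_brain_fraction(attribution: Grid, brain_mask: List[List[int]]) -> float:
    """Share of positive attribution located where brain_mask == 0."""
    inside = outside = 0.0
    for att_row, mask_row in zip(attribution, brain_mask):
        for a, m in zip(att_row, mask_row):
            if a <= 0:
                continue  # only positive evidence is counted
            if m:
                inside += a
            else:
                outside += a
    total = inside + outside
    return outside / total if total > 0 else 0.0


def flag_explanation(attribution: Grid, brain_mask: List[List[int]],
                     threshold: float = 0.2) -> bool:
    """True if the explanation leans suspiciously on non-brain regions."""
    return outside_brain_fraction(attribution, brain_mask) > threshold
```

Flagged cases would then warrant manual review of both the explanation and the model, since a high outside-brain share may indicate either an unreliable explainer or a model that has learned a spurious shortcut.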

CONCLUSION

XAI has the potential to greatly improve the diagnostic accuracy and confidence of primary care physicians and non-experts in recognizing Alzheimer's disease (AD) in busy medical environments. By offering clear justifications for the outputs of AI models, XAI helps the broader public and non-experts reach better-informed conclusions when experts are unavailable. This is especially useful in hectic clinical environments, where the capability for accurate diagnosis is essential. In the XAI context, a "black box" denotes a model that produces decisions without offering any specific reasoning or insight into how those decisions were reached. Although existing XAI approaches, both model-agnostic and model-specific, provide different levels of explainability, they still struggle to fully clarify the decision process, and the growing intricacy of AI models continues to turn them into black boxes. This review contributes to the ongoing discussion by offering a thorough summary of existing AI methods and pinpointing directions for future studies. An essential contribution of this study is the proposal of a new kind of explanation for future work, which we have labelled "Enhanced by features." To tackle the drawbacks of black-box models, this method incorporates clinically significant biomarkers, such as cortical thickness and brain volume, into the interpretation process and displays them when presenting the findings. By visually including these medical attributes, the model's predictions become more interpretable and better aligned with clinical understanding, significantly enhancing the capacity of non-expert healthcare professionals to provide precise diagnoses.
Integrating feature-enhanced explanations into the medical workflow could improve the dependability of AI-based diagnoses, making them an essential instrument for improving patient outcomes in the identification of Alzheimer's disease.
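The review proposes the "Enhanced by features" explanation without prescribing an implementation. Purely as a hypothetical illustration of the idea, the sketch below pairs a model's predicted probability with biomarkers such as cortical thickness and brain volume, each expressed as a z-score against normative statistics supplied by the caller. All names, the normative values in the demo, and the |z| >= 2 flag are illustrative assumptions, not clinical reference ranges or the authors' method.

```python
"""Hypothetical 'feature-enhanced' explanation report (sketch)."""
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Norm:
    mean: float  # normative mean, supplied by the caller
    sd: float    # normative standard deviation


def feature_enhanced_report(probability: float,
                            measurements: Dict[str, float],
                            norms: Dict[str, Norm],
                            z_flag: float = 2.0) -> List[str]:
    """Return report lines combining the prediction with biomarker z-scores."""
    lines = [f"Model-estimated AD probability: {probability:.2f}"]
    for name, value in measurements.items():
        z = (value - norms[name].mean) / norms[name].sd
        note = "outside normative range" if abs(z) >= z_flag else "within normative range"
        lines.append(f"{name}: {value:g} (z = {z:+.1f}, {note})")
    return lines


if __name__ == "__main__":
    # Placeholder numbers in arbitrary units, not real clinical norms
    norms = {"cortical_thickness": Norm(10.0, 1.0), "hippocampal_volume": Norm(50.0, 5.0)}
    patient = {"cortical_thickness": 7.5, "hippocampal_volume": 46.0}
    print("\n".join(feature_enhanced_report(0.81, patient, norms)))
```

Presenting the prediction next to measurements a clinician already knows how to read is one plausible way to let non-experts check whether the model's output agrees with the underlying anatomy.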

REFERENCES

- Hasan Saif, Fatima, Mohamed Nasser Al-Andoli, and Wan Mohd Yaakob Wan Bejuri. "Explainable AI for Alzheimer detection: A review of current methods and applications." Applied Sciences 14.22 (2024): 10121.

- Parihar, M. S., and Taruna Hemnani. "Alzheimer’s disease pathogenesis and therapeutic interventions." Journal of clinical neuroscience 11.5 (2004): 456-467.

- Fabrizio, Carlo, et al. "Artificial intelligence for Alzheimer’s disease: promise or challenge?." Diagnostics 11.8 (2021): 1473.

- Vrahatis, Aristidis G., et al. "Revolutionizing the early detection of Alzheimer’s disease through non-invasive biomarkers: the role of artificial intelligence and deep learning." Sensors 23.9 (2023): 4184

- Jack, C. R., Knopman, D. S., Jagust, W. J., Shaw, L. M., Aisen, P. S., Weiner, M. W., ... & Trojanowski, J. Q. (2010). Hypothetical model of dynamic biomarkers of the Alzheimer's pathological cascade. The Lancet Neurology, 9(1), 119-128.

- Al Olaimat, Mohammad, et al. "PPAD: A deep learning architecture to predict progression of Alzheimer’s disease." Bioinformatics 39.Supplement_1 (2023): i149-i157.

- Arafa, Doaa Ahmed, et al. "Early detection of Alzheimer’s disease based on the state-of-the-art deep learning approach: a comprehensive survey." Multimedia Tools and Applications 81.17 (2022): 23735-23776.

- Martínez-Murcia, Francisco Jesús, et al. "Computer aided diagnosis tool for Alzheimer’s disease based on Mann–Whitney–Wilcoxon U-test." Expert Systems with Applications 39.10 (2012): 9676-9685.

- Karas, G. B., Scheltens, P., Rombouts, S. A., Visser, P. J., van Schijndel, R. A., Fox, N. C., & Barkhof, F. (2004). Global and local gray matter loss in mild cognitive impairment and Alzheimer's disease. Neuroimage, 23(2), 708-716.

- Liu, Xiaonan, et al. "Use of multimodality imaging and artificial intelligence for diagnosis and prognosis of early stages of Alzheimer's disease." Translational Research 194 (2018): 56-67

- Kale, Mayur B., et al. "AI-Driven Innovations in Alzheimer's Disease: Integrating Early Diagnosis, Personalized Treatment, and Prognostic Modelling." Ageing Research Reviews (2024): 102497

- Niyaz Ahmad Wani, Ravinder Kumar, Mamta, Jatin Bedi, Imad Rida. "Explainable AI-driven IoMT fusion: Unravelling techniques, opportunities, and challenges with Explainable AI in healthcare", Information Fusion, 2024

- Samek, W. "Explainable artificial intelligence: Understanding, visualizing and interpreting deep learning models." arXiv preprint arXiv:1708.08296 (2017).

- Jo, Taeho, Kwangsik Nho, and Andrew J. Saykin. "Deep learning in Alzheimer's disease: diagnostic classification and prognostic prediction using neuroimaging data." Frontiers in aging neuroscience 11 (2019): 220.

- AbuAlrob, Majd A., and Boulenouar Mesraoua. "Harnessing artificial intelligence for the diagnosis and treatment of neurological emergencies: a comprehensive review of recent advances and future directions." Frontiers in Neurology 15 (2024): 1485799.

- Alarjani, Maitha. "Alzheimer’s Disease Detection based on Brain Signals using Computational Modeling." 2024 Seventh International Women in Data Science Conference at Prince Sultan University (WiDS PSU). IEEE, 2024.

- Jahan, Sobhana, et al. "Explainable AI-based Alzheimer’s prediction and management using multimodal data." Plos one 18.11 (2023): e0294253.

- Yousefzadeh, Nooshin, et al. "Neuron-level explainable AI for Alzheimer’s Disease assessment from fundus images." Scientific Reports 14.1 (2024): 7710.

- Černevičienė, Jurgita, and Audrius Kabašinskas. "Explainable artificial intelligence (XAI) in finance: A systematic literature review." Artificial Intelligence Review 57.8 (2024): 216.

- Haddada, Karim, et al. "Assessing the Interpretability of Machine Learning Models in Early Detection of Alzheimer's Disease." 2024 16th International Conference on Human System Interaction (HSI). IEEE, 2024.

- Vetrithangam, D., et al. "Towards Explainable Detection of Alzheimer's Disease: A Fusion of Deep Convolutional Neural Network and Enhanced Weighted Fuzzy C-Mean." Current medical imaging 20.1 (2024): e15734056317205

- Ishaaq, Namria, Md Tabrez Nafis, and Anam Reyaz. "Leveraging Deep Learning for Early Diagnosis of Alzheimer's Using Comparative Analysis of Convolutional Neural Network Techniques." Driving Smart Medical Diagnosis Through AI-Powered Technologies and Applications. IGI Global, 2024. 142-155.

- Samek, W., Montavon, G., Vedaldi, A., Hansen, L. K., & Müller, K. R. (Eds.). (2019). Explainable AI: interpreting, explaining and visualizing deep learning (Vol. 11700). Springer Nature.

- Bazarbekov, Ikram, et al. "A review of artificial intelligence methods for Alzheimer's disease diagnosis: Insights from neuroimaging to sensor data analysis." Biomedical Signal Processing and Control 92 (2024): 106023.

- Abadir, P., Oh, E., Chellappa, R., Choudhry, N., Demiris, G., Ganesan, D., ... & Walston, J. D. (2024). Artificial Intelligence and Technology Collaboratories: Innovating aging research and Alzheimer's care. Alzheimer's & Dementia, 20(4), 3074-3079.

- Fabietti, Marcos, Mufti Mahmud, Ahmad Lotfi, Alessandro Leparulo, Roberto Fontana, Stefano Vassanelli, and Cristina Fasolato. "Early detection of Alzheimer’s disease from cortical and hippocampal local field potentials using an ensembled machine learning model." IEEE Transactions on Neural Systems and Rehabilitation Engineering 31 (2023): 2839-2848.

- Battista, Petronilla, et al. "Artificial intelligence and neuropsychological measures: The case of Alzheimer’s disease." Neuroscience & Biobehavioral Reviews 114 (2020): 211-228.

- Petti, Ulla, Simon Baker, and Anna Korhonen. "A systematic literature review of automatic Alzheimer’s disease detection from speech and language." Journal of the American Medical Informatics Association 27.11 (2020): 1784-1797.

- Umeda-Kameyama, Y., Kameyama, M., Tanaka, T., Son, B. K., Kojima, T., Fukasawa, M., ... & Akishita, M. (2021). Screening of Alzheimer’s disease by facial complexion using artificial intelligence. Aging (Albany NY), 13(2), 1765.

- Yousefzadeh, N., Tran, C., Ramirez-Zamora, A., Chen, J., Fang, R., & Thai, M. T. (2024). Neuron-level explainable AI for Alzheimer’s Disease assessment from fundus images. Scientific Reports, 14(1), 7710.

- Mirzaei, G., & Adeli, H. (2022). Machine learning techniques for diagnosis of alzheimer disease, mild cognitive disorder, and other types of dementia. Biomedical Signal Processing and Control, 72, 103293.

- Kose, Utku, Nilgun Sengoz, Xi Chen, and Jose Antonio Marmolejo Saucedo, eds. Explainable Artificial Intelligence (XAI) in Healthcare. CRC Press, 2024.

- Subramanian, Karthikeyan, et al. "Exploring Intervention Techniques for Alzheimer’s Disease: Conventional Methods and the Role of AI in Advancing Care." Artificial Intelligence and Applications. Vol. 2. No. 2. 2024.

- Trambaiolli, L. R., Lorena, A. C., Fraga, F. J., Kanda, P. A., Anghinah, R., & Nitrini, R. (2011). Improving Alzheimer's disease diagnosis with machine learning techniques. Clinical EEG and neuroscience, 42(3), 160-165.

- Neeraj, Kumar N., and V. Maurya. "A review on machine learning (feature selection, classification and clustering) approaches of big data mining in different area of research." Journal of critical reviews 7.19 (2020): 2610-2626.

- Abdul Mustapha, Iskandar Ishak, Nor Nadiha Mohd Zaki, Mohammad Rashedi Ismail-Fitry, Syariena Arshad, Awis Qurni Sazili. "Application of machine learning approach on halal meat authentication principle, challenges, and prospects: A review", Heliyon, 2024.

- Ozgur, A. (2004). Supervised and unsupervised machine learning techniques for text document categorization. Unpublished Master’s Thesis, İstanbul: Boğaziçi University.

- Afzal, S., Maqsood, M., Khan, U., Mehmood, I., Nawaz, H., Aadil, F., ... & Yunyoung, N. (2021). Alzheimer disease detection techniques and methods: a review.

- Chauhan, Nitin Kumar, and Krishna Singh. "A review on conventional machine learning vs deep learning." 2018 International conference on computing, power and communication technologies (GUCON). IEEE, 2018.

- Tanveer, M., Richhariya, B., Khan, R. U., Rashid, A. H., Khanna, P., Prasad, M., & Lin, C. T. (2020). Machine learning techniques for the diagnosis of Alzheimer’s disease: A review. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM), 16(1s), 1-35.

- Osisanwo, F. Y., Akinsola, J. E. T., Awodele, O., Hinmikaiye, J. O., Olakanmi, O., & Akinjobi, J. (2017). Supervised machine learning algorithms: classification and comparison. International Journal of Computer Trends and Technology (IJCTT), 48(3), 128-138.

- Fisher, Ronald A. "The use of multiple measurements in taxonomic problems." Annals of eugenics 7.2 (1936): 179-188.

- Wang, Huan, et al. "Trace ratio vs. ratio trace for dimensionality reduction." 2007 IEEE Conference on Computer Vision and Pattern Recognition. IEEE, 2007.

- Zhao, Shuping, et al. "Linear discriminant analysis." Nature Reviews Methods Primers 4.1 (2024): 70.

- Ayesha, Shaeela, Muhammad Kashif Hanif, and Ramzan Talib. "Overview and comparative study of dimensionality reduction techniques for high dimensional data." Information Fusion 59 (2020): 44-58.

- Mallet, Y., D. Coomans, and O. De Vel. "Recent developments in discriminant analysis on high dimensional spectral data." Chemometrics and Intelligent Laboratory Systems 35.2 (1996): 157-173.

- Farlow, Martin R., Michael L. Miller, and Vojislav Pejovic. "Treatment options in Alzheimer’s disease: maximizing benefit, managing expectations." Dementia and geriatric cognitive disorders 25.5 (2008): 408-422.

- Galvin, James E. "Prevention of Alzheimer's disease: lessons learned and applied." Journal of the American Geriatrics Society 65.10 (2017): 2128-2133.

- De la Fuente Garcia, S., Ritchie, C. W., & Luz, S. (2020). Artificial intelligence, speech, and language processing approaches to monitoring Alzheimer’s disease: a systematic review. Journal of Alzheimer's Disease, 78(4), 1547-1574.

- Keith, Cierra M., et al. "More Similar than Different: Memory, Executive Functions, Cortical Thickness, and Glucose Metabolism in Biomarker-Positive Alzheimer’s Disease and Behavioral Variant Frontotemporal Dementia." Journal of Alzheimer's Disease Reports 8.1 (2024): 57-73.

- Shahbaz, M., Ali, S., Guergachi, A., Niazi, A., & Umer, A. (2019, July). Classification of Alzheimer's Disease using Machine Learning Techniques. In Data (pp. 296-303).

- Kuncheva, Ludmila I. "On the optimality of Naïve Bayes with dependent binary features." Pattern Recognition Letters 27, no. 7 (2006): 830-837.

- Domingos, Pedro, and Michael Pazzani. "On the optimality of the simple Bayesian classifier under zero-one loss." Machine learning 29 (1997): 103-130.

- Kotsiantis, Sotiris B., Ioannis Zaharakis, and P. Pintelas. "Supervised machine learning: A review of classification techniques." Emerging artificial intelligence applications in computer engineering 160.1 (2007): 3-24.

- Wang, H., Yajima, A., Liang, R. Y., & Castaneda, H. (2015). Bayesian modeling of external corrosion in underground pipelines based on the integration of Markov chain Monte Carlo techniques and clustered inspection data. Computer-Aided Civil and Infrastructure Engineering, 30(4), 300-316.

- Alsabti, Khaled, Sanjay Ranka, and Vineet Singh. "An efficient k-means clustering algorithm." (1997).

- Jha, D., Alam, S., Pyun, J. Y., Lee, K. H., & Kwon, G. R. (2018). Alzheimer's disease detection using extreme learning machine, complex dual tree wavelet principal coefficients and linear discriminant analysis. Journal of Medical Imaging and Health Informatics, 8(5), 881-890.

- Kotsiantis, Sotiris B., Ioannis Zaharakis, and P. Pintelas. "Supervised machine learning: A review of classification techniques." Emerging artificial intelligence applications in computer engineering 160.1 (2007): 3-24.

- Bishop, Christopher M. Neural networks for pattern recognition. Oxford university press, 1995.

- LeCun, Yann, Yoshua Bengio, and Geoffrey Hinton. "Deep learning." nature 521.7553 (2015): 436-444.

- Ramachandran, R., Rajeev, D. C., Krishnan, S. G., & Subathra, P. (2015). Deep learning an overview. International Journal of Applied Engineering Research, 10(10), 25433-25448.

- Hubel, David H., and Torsten N. Wiesel. "Receptive fields and functional architecture of monkey striate cortex." The Journal of physiology 195.1 (1968): 215-243.

- Fan, J., Xu, W., Wu, Y., & Gong, Y. (2010). Human tracking using convolutional neural networks. IEEE transactions on Neural Networks, 21(10), 1610-1623.

- Aloysius, Neena, and M. Geetha. "A review on deep convolutional neural networks." 2017 international conference on communication and signal processing (ICCSP). IEEE, 2017.

- Hinton, G. E., Srivastava, N., Krizhevsky, A., Sutskever, I., & Salakhutdinov, R. R. (2012). Improving neural networks by preventing co-adaptation of feature detectors. arXiv preprint arXiv:1207.0580.

- Zeiler, Matthew D., and Rob Fergus. "Stochastic pooling for regularization of deep convolutional neural networks." arXiv preprint arXiv:1301.3557 (2013).

- Y. Gong, L. Wang, R. Guo, and S. Lazebnik, “Multi-scale orderless pooling of deep convolutional activation features,” ECCV, 2014.

- Zia, Maryam, and Hiba Gohar. "Using SVM and CNN as Image Classifiers for Brain Tumor Dataset." Advanced Interdisciplinary Applications of Machine Learning Python Libraries for Data Science. IGI Global, 2023. 202-225.

- Lin, M. (2013). Network in network. arXiv preprint arXiv:1312.4400.

- LeCun, Yann, Yoshua Bengio, and Geoffrey Hinton. "Deep learning." nature 521.7553 (2015): 436-444.

- Bengio, Yoshua, Patrice Simard, and Paolo Frasconi. "Learning long-term dependencies with gradient descent is difficult." IEEE transactions on neural networks 5.2 (1994): 157-166.

- Hochreiter, S., & Schmidhuber, J. (1997). Long short-term memory. Neural Computation, 9(8), 1735-1780.

- Shukla, Anushka, Shivanshu Upadhyay, Priya Rachel Bachan, Udit Narayan Bera, R. V. Kshirsagar, and Neeta Nathani. "Dynamic Explainability in AI for Neurological Disorders: An Adaptive Model for Transparent Decision-Making in Alzheimer's Disease Diagnosis." In 2024 IEEE 13th International Conference on Communication Systems and Network Technologies (CSNT), pp. 980-986. IEEE, 2024.

- Rehman, Shafiq Ul, Noha Tarek, Caroline Magdy, Mohammed Kamel, Mohammed Abdelhalim, Alaa Melek, Lamees N. Mahmoud, and Ibrahim Sadek. "AI-based tool for early detection of Alzheimer's disease." Heliyon 10, no. 8 (2024).

- Brusini, Lorenza, et al. "XAI-Based Assessment of the AMURA Model for Detecting Amyloid-β and Tau Microstructural Signatures in Alzheimer’s Disease." IEEE Journal of Translational Engineering in Health and Medicine (2024).

- Deshmukh, Afif, Neave Kallivalappil, Kyle D'souza, and Chinmay Kadam. "AL-XAI-MERS: Unveiling Alzheimer's Mysteries with Explainable AI." In 2024 Second International Conference on Emerging Trends in Information Technology and Engineering (ICETITE), pp. 1-7. IEEE, 2024.

- Chaddad, A., Peng, J., Xu, J., & Bouridane, A. (2023). Survey of explainable AI techniques in healthcare. Sensors, 23(2), 634.

- Simonyan, Karen, Andrea Vedaldi, and Andrew Zisserman. "Deep inside convolutional networks: Visualising image classification models and saliency maps." arXiv preprint arXiv:1312.6034 (2013).

- González-Alday, Raquel, Esteban García-Cuesta, Casimir A. Kulikowski, and Victor Maojo. "A scoping review on the progress, applicability, and future of explainable artificial intelligence in medicine." Applied Sciences 13, no. 19 (2023): 10778.

- Nasser Al-Andoli, M., Chiang Tan, S., & Ping Cheah, W. (2022). Distributed parallel deep learning with a hybrid backpropagation-particle swarm optimization for community detection in large complex networks.

- Vimbi, Viswan, Noushath Shaffi, and Mufti Mahmud. "Interpreting artificial intelligence models: a systematic review on the application of LIME and SHAP in Alzheimer’s disease detection." Brain Informatics 11.1 (2024): 10.

Ashwini Pujari*

Manasi Rajput

Shruti Patil

10.5281/zenodo.14774029
